[updated 17 April 2017]

First we find a web search which produces the results we're after. This screenshot shows how that's done, and the url we want to grab as the starting url for our crawl.

That url goes into the starting url box in Webscraper.

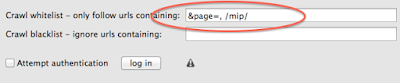

We obviously don't want the crawling engine to scan the entire site but we do want it to follow those 'More info' links, because that's where the detail is. We notice that those links go through to a url which contains /mip/ so we can use that term to limit the scan (called 'whitelisting').

We also notice the pagination here. It'll be useful if Webscraper will follow those links, to find further results for our search, and then follow the 'more information' links on those pages. We notice that the pagination uses "&page=" in its urls, so we can whitelist that term too in order to allow the crawler access to page 2, page 3 etc of our search results.

The whitelist field in Webscraper allows for multiple expressions, so we can add both of these terms (separated with a comma). Webscraper then shouldn't follow any url which contains neither of these terms.
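If it helps to see the whitelisting idea in code, here's a minimal Python sketch of the rule as described above. It is not Webscraper's actual implementation; the function names `parse_whitelist` and `should_follow`, the example.com urls and the "empty whitelist means no restriction" behaviour are all my own assumptions for illustration.

```python
def parse_whitelist(field: str) -> list[str]:
    """Split a comma-separated whitelist field into trimmed, non-empty terms."""
    return [term.strip() for term in field.split(",") if term.strip()]


def should_follow(url: str, whitelist: list[str]) -> bool:
    """Follow a url only if it contains at least one whitelisted term."""
    # Assumption: an empty whitelist places no restriction on the crawl.
    if not whitelist:
        return True
    return any(term in url for term in whitelist)


# The two terms from this article, entered as one comma-separated field.
terms = parse_whitelist("/mip/, &page=")

# Hypothetical urls, purely for illustration.
print(should_follow("https://example.com/mip/some-business-123", terms))
print(should_follow("https://example.com/search?terms=plumbers&page=2", terms))
print(should_follow("https://example.com/about", terms))
```

The check is a plain substring match, which is why a short fragment such as /mip/ is enough to pick out every 'More info' page.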

** note a recent change to Webscraper's fields and my advice here - see the end of this article.

That's the setting up. If the site in question requires you to be logged in, you'll need to check 'attempt authentication' and use the button to visit the site and log in. That's dealt with in another article.

Kick off the scan with the Go button. At present, the way Webscraper works is that you perform the scan, then when it completes, you build your output file and finally save it.

If the scan appears to be scanning many more pages than you expected, then you can use the Live View button to see what's happening, and if necessary, stop and adjust your settings. You're very welcome to contact support if you need help.

When the scan finishes, we're presented with the output file builder. I'm after a csv containing a few columns. I always start with the page url as a reference. That allows you to view the page in question if you need to check anything. Select URL and press Add.

Here's the fun part. We need to find the information we want, and add column(s) to our output file using a class or id if possible, or maybe a regular expression. First we try the class helper.

This is the class / id helper. It displays the page, it shows a list of classes found on the page, and even highlights them as you hover over the list. Because we want to scrape information off the 'more info' pages, I've clicked through to one of those pages. (You can just click links within the browser of the class helper.)

Rather helpfully, the information I want here (the phone number) has a class "phone". I can double-click that in the table on the left to enter it into the field in the output file builder, and then press the Add button to add it to my output file. I do exactly the same to add the name of the business (class - "sales-info").
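For anyone curious what "scrape by class" amounts to, here's a rough Python sketch using only the standard library. It isn't how Webscraper does it internally; the `ClassTextExtractor` class, the `extract` helper and the sample html (including the business name and phone number) are made up for illustration. Only the class names "phone" and "sales-info" come from the page discussed above.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class ClassTextExtractor(HTMLParser):
    """Collect the text inside the first element carrying a given class."""

    def __init__(self, wanted_class: str):
        super().__init__()
        self.wanted_class = wanted_class
        self.depth = 0      # greater than 0 while inside the matching element
        self.done = False   # True once the first match has been closed
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if self.done or tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.wanted_class in classes:
            self.depth = 1

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            self.done = True

    def handle_data(self, data):
        if self.depth:
            self.parts.append(data)

    def text(self) -> str:
        # Collapse runs of whitespace into single spaces.
        return " ".join("".join(self.parts).split())


def extract(html: str, wanted_class: str) -> str:
    parser = ClassTextExtractor(wanted_class)
    parser.feed(html)
    return parser.text()


# Made-up sample markup standing in for a 'more info' page.
sample = """
<div class="sales-info"><h1>Example Plumbing Co</h1></div>
<p class="phone">(555) 010-0000</p>
"""

print(extract(sample, "sales-info"))
print(extract(sample, "phone"))
```

Each call to `extract` corresponds to one column in the output file: one class name in, one piece of text out per page.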

For good measure I've also added the weblink to my output file. (I'm going to go into detail re web links in a different article because there are some very useful things you can do.)

So I press save and here's the output file. (I've not bothered to blur any of the data here; it's available on the web.)

So that's it. How about getting a nice html file with all those weblinks as clickable links? That'll be in the next article.

** update - screen-scraping is a constantly-shifting thing... Recently yellowpages began putting "you may also be interested in..." links on the information pages. These links contain /mip/ and they aren't limited to the area that you originally searched for. The result: WebScraper's crawl goes on ad infinitum.

What we needed was a way to follow the pagination from our original search results page, to follow any links through to the information pages, to scrape the data from those pages (and only those pages) but not follow any links on them.

So now Webscraper (as of v2.0.3) has a field below the 'blacklist' and 'whitelist' fields labelled 'information page:'. A partial url in that box indicates to Webscraper that matching urls are to be scraped, but not parsed for more links. My setup for a yellowpages scrape looks like this and it works beautifully:
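To make the 'information page' rule concrete, here's a small Python sketch of the decision for each url. It is only my illustration of the behaviour described above, not Webscraper's code. The `Action` names and the `decide` function are hypothetical, and the split of terms used in the example (&page= in the whitelist, /mip/ as the information page term) is an assumption about a sensible setup rather than a copy of any screenshot.

```python
from enum import Enum


class Action(Enum):
    SKIP = "skip"                # don't visit the url at all
    SCRAPE_ONLY = "scrape_only"  # scrape the page, but don't parse it for links
    CRAWL = "crawl"              # visit the page and parse it for more links


def decide(url: str, whitelist: list[str], info_terms: list[str]) -> Action:
    """Pick what to do with a url found during the crawl."""
    # Information pages win: scrape them, never follow their links.
    if any(term in url for term in info_terms):
        return Action.SCRAPE_ONLY
    # Whitelisted urls (here, the pagination) are crawled for further links.
    if any(term in url for term in whitelist):
        return Action.CRAWL
    return Action.SKIP


whitelist = ["&page="]   # assumed: pagination only
info_terms = ["/mip/"]   # assumed: the 'More info' pages

# Hypothetical urls, purely for illustration.
for url in (
    "https://example.com/search?terms=plumbers&page=2",
    "https://example.com/mip/some-business-123",
    "https://example.com/about",
):
    print(url, decide(url, whitelist, info_terms).value)
```

Because a "you may also be interested in" link is only ever found on an information page, and those pages are never parsed for links, the crawl can't wander off into other areas.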

Once again, you're very welcome to contact support if you need help with any of this.
