Like version 8, version 9 doesn't have any dramatic changes in the interface, so it'll be free for existing v7 or v8 licence holders. It'll remain a one-off purchase, but there may be a small increase in price for new customers or for those upgrading from v6 or earlier.

But that's not to say that there aren't some important changes going on, which I'll outline here.

All of this applies to Integrity too, although the release of Scrutiny 9 will come first.

Inspectors and warnings

The biggest UI change is in the link inspector. It puts the list of redirects (if there are any) on a tab rather than a sheet, so the information is more obvious. There is also a new 'Warnings' tab.

Traditionally in Integrity and Scrutiny, a link coloured orange means a warning, and in the past this meant only one thing: a redirect. (Some users don't want to see redirects as warnings, which is fine; there's an option to switch redirect warnings off.)

Now the orange warning could mean one or more of a number of things. While scanning, the engine may encounter things which aren't showstoppers but which the user might be grateful to know about. Until now there hasn't been a place for such information. In version 9, these things are displayed in the Warnings tab, and the link appears orange in the table if there are any warnings (including redirects, unless you have that switched off).
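As a rough illustration of that rule, here's a minimal Python sketch, assuming a link that is otherwise fine (a bad link would be shown differently). The `Link` class, its fields and the `warn_on_redirects` flag are hypothetical names, not Scrutiny's actual internals: a link counts as orange if it has any warnings, with the redirect warning only counted when that preference is on.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    """Hypothetical model of a checked link that isn't itself bad."""
    url: str
    redirected: bool = False                           # did requesting it redirect?
    warnings: list[str] = field(default_factory=list)  # other warnings, e.g. canonical tags


def effective_warnings(link: Link, warn_on_redirects: bool = True) -> list[str]:
    """The warnings that would be listed for this link."""
    result = list(link.warnings)
    if link.redirected and warn_on_redirects:
        result.insert(0, "redirect")
    return result


def is_orange(link: Link, warn_on_redirects: bool = True) -> bool:
    """Orange means at least one warning remains after applying the preference."""
    return bool(effective_warnings(link, warn_on_redirects))
```

For example, `is_orange(Link("https://example.com/old", redirected=True), warn_on_redirects=False)` is `False`, but the same link with another warning attached would still be orange.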

Examples of things you may be warned about include more than one canonical tag on the target page, and unterminated or improperly terminated script tags or comments (ie a <!-- with no matching -->, which could be interpreted as commenting out the rest of the page, though browsers usually seem to ignore the opening tag if there's no closing one). Redirect chains also appear in the warnings, and the threshold for what counts as a chain can now be set in Preferences. Previously, redirect chains were only visible in the SEO results, with a hard-coded threshold of 3.
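The two page-level checks mentioned above, plus a configurable chain threshold, could be sketched in Python along these lines. This is an illustration under assumptions, not how Scrutiny does it: `page_warnings`, `has_unterminated_comment` and `redirect_chain_warning` are hypothetical names, the comment check is a naive string scan (a `<!--` inside a script string would also be counted), and treating a chain of exactly `threshold` redirects as a warning is a guess at the boundary.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalCounter(HTMLParser):
    """Counts <link rel="canonical"> tags (self-closing ones included)."""

    def __init__(self) -> None:
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        if tag == "link":
            rel = dict(attrs).get("rel") or ""
            if "canonical" in rel.lower().split():
                self.count += 1


def has_unterminated_comment(html: str) -> bool:
    """Naive scan: True if some '<!--' has no later '-->' closing it."""
    pos = 0
    while True:
        start = html.find("<!--", pos)
        if start == -1:
            return False
        end = html.find("-->", start + 4)
        if end == -1:
            return True
        pos = end + 3


def page_warnings(html: str) -> list[str]:
    """Collect page-level warnings for one target page."""
    warnings = []
    counter = CanonicalCounter()
    counter.feed(html)
    counter.close()
    if counter.count > 1:
        warnings.append("more than one canonical tag")
    if has_unterminated_comment(html):
        warnings.append("unterminated comment")
    return warnings


def redirect_chain_warning(hops: int, threshold: int = 3) -> Optional[str]:
    """Warn when a url passes through `threshold` or more redirects (assumed boundary)."""
    if hops >= threshold:
        return f"redirect chain ({hops} redirects)"
    return None
```

Making `threshold` a parameter rather than a constant mirrors the move from a hard-coded 3 to a value set in Preferences.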

The number of things Scrutiny can alert you about in the Warnings tab will increase in the future.

Strictly speaking, there's an important distinction between link properties and page properties. A link has link text, a target url and other attributes such as rel-nofollow. A page is the file that your browser loads; it contains links to other pages.
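To make the distinction concrete, here's a minimal Python sketch of the two kinds of object. The class and field names are hypothetical, chosen for illustration only: link properties live on the link, and page properties live on the page the link appears on or points to.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    """Properties of a link: they belong to the <a> tag itself."""
    text: str                 # link text
    target_url: str           # where the link points
    parent_url: str           # the page the link appears on
    nofollow: bool = False    # rel="nofollow" on this particular link


@dataclass
class Page:
    """Properties of a page: the file the browser loads."""
    url: str
    title: str = ""
    word_count: int = 0
    links: list[Link] = field(default_factory=list)  # links found on this page


home = Page("https://example.com/", title="Home")
home.links.append(Link("About us", "https://example.com/about", home.url))
```

The same target page can be linked from many places with different link text or nofollow settings, so those belong to each `Link`, while the title and word count belong to the `Page` just once.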

A 'page inspector' has long been available in Scrutiny. It shows a large amount of information: metadata, headings, word count, the number of links on the page, the number of links *to* that page and more. Much of this is, of course, also visible in the SEO table.

Whether you see the link inspector or the page inspector depends on the context (for example, the SEO table is concerned with pages rather than links, so a double-click opens the page inspector). But when viewing the properties of a link, you may want to see the page properties. In the context of a link you might think of either the parent page or the target page, though the target is more likely to come to mind. So it's now possible to see some basic information about the target on the 'target' tab of the link inspector, and you can press a button to open the full page inspector.

[update 26 May] The page inspector has always had fields for the number of inbound and outbound links. Now it also has sortable tables listing the inbound and outbound links for the page being inspected.
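Given a flat list of every link found during a scan, both tables can be derived by filtering on one end of the link and sorting by the chosen column. A minimal Python sketch, with hypothetical names (`Link`, `inbound_links`, `outbound_links`) that are not taken from Scrutiny:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    parent_url: str   # page the link appears on
    target_url: str   # page the link points to
    text: str = ""    # link text


def outbound_links(links: list[Link], page_url: str, sort_key: str = "target_url") -> list[Link]:
    """Links that appear on page_url, sorted by the chosen column."""
    found = [link for link in links if link.parent_url == page_url]
    return sorted(found, key=lambda link: getattr(link, sort_key))


def inbound_links(links: list[Link], page_url: str, sort_key: str = "parent_url") -> list[Link]:
    """Links found anywhere in the scan that point at page_url."""
    found = [link for link in links if link.target_url == page_url]
    return sorted(found, key=lambda link: getattr(link, sort_key))
```

Passing a different `sort_key` (for example `"text"`) corresponds to clicking a different column header.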

Rechecking

This is an important function that Integrity / Scrutiny have never done very well.

You run a large scan, you fix some stuff, and want to re-check. But you only want to re-check the things that were wrong during the first scan, not spend hours running another full scan.

Re-checking functionality has previously been limited. The interface has been changed quite recently to make it clear that you'll "Recheck this url", ie the url that the app found during the first scan.

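Half of the time, possibly most of the time, you'll have 'fixed' the link by editing its target or removing it from the page entirely. In that case, re-checking the original url achieves nothing: it will most likely still fail, and the link on the page no longer points to it anyway.

The only way to handle this is to re-check the page(s) that your fixed link appears on, so that the app sees the links as they are now. This apparently simple-sounding thing is by far the most complex and difficult of the v9 changes.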

Version 9 still has the simple 'Recheck this url' available from the link inspector and various context menus (it can be used with a multiple selection). It now also has 'Recheck the page this link appears on', which can likewise be used with a multiple selection.
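The difference between the two options can be sketched in Python. Everything here is hypothetical: scan results are modelled as a dictionary keyed by `(parent_url, target_url)`, and `fetch_links` and `check` stand in for the app's real page-download and link-check code, passed in as functions so the sketch runs with stubs. A real crawler has much more state to keep consistent than one dictionary, so treat this as the core idea only.

```python
from typing import Callable

Key = tuple[str, str]  # (parent_url, target_url)


def recheck_urls(results: dict[Key, int], selected: list[Key],
                 check: Callable[[str], int]) -> dict[Key, int]:
    """'Recheck this url': request the same target urls again."""
    updated = dict(results)
    for key in selected:
        updated[key] = check(key[1])
    return updated


def recheck_pages(results: dict[Key, int], selected: list[Key],
                  fetch_links: Callable[[str], list[str]],
                  check: Callable[[str], int]) -> dict[Key, int]:
    """'Recheck the page this link appears on'.

    Drops every result found on the affected pages, downloads those pages
    again and checks the links they contain now, so a link that was edited
    or removed disappears from the results.
    """
    parents = {parent for parent, _ in selected}  # each page once, even for multiple selections
    updated = {key: status for key, status in results.items() if key[0] not in parents}
    for parent in sorted(parents):
        for target in fetch_links(parent):
            updated[(parent, target)] = check(target)
    return updated
```

With a stubbed `check` that still returns 404 for the old url, `recheck_urls` reports the same failure, whereas `recheck_pages` drops the old link and checks whatever the page links to now.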

Reporting

This is another important area that has had less than its fair share of development in the past.

Starting to offer services ourselves has prompted some improvements here.

Over time, simple functionality is superseded by better options. The "On finish save bad links / SEO as csv" options are no longer needed and have now gone, because "On finish save report" does those things and more. Having them all together led to confusion, particularly for users who like to switch everything on with abandon: checking all the boxes would lead to the same csvs being saved multiple times, and sometimes to multiple save dialogs at the end of the scan.

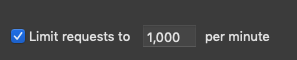

The 'On finish' section of the settings now looks like this. If you want things saved automatically after a scheduled scan or a manual 'scan with actions', switch on 'Save report' and then choose exactly what you want included.
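One way to picture why a single switch avoids the duplicate files: the report's contents are a set, so each component can only be chosen, and saved, once. A minimal Python sketch with hypothetical setting and file names (the actual checkboxes and output formats may differ):

```python
from dataclasses import dataclass, field


@dataclass
class OnFinishSettings:
    # Hypothetical names: one 'Save report' switch plus the chosen contents.
    save_report: bool = False
    report_contents: set[str] = field(default_factory=set)  # e.g. {"bad links", "seo"}


def files_to_save(settings: OnFinishSettings) -> list[str]:
    """Everything saved at the end of a scan, each component at most once."""
    if not settings.save_report:
        return []
    # A set can't hold the same component twice, so no duplicate csvs.
    return sorted(name.replace(" ", "_") + ".csv" for name in settings.report_contents)
```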

Reduced false positives

The remaining v9 changes are invisible. One of them is a fundamental change to the program flow. Previously, link urls were 'unencoded' for storage / display and re-encoded for testing. In theory this should be fine, but I've seen some examples via the support desk where it isn't. In one case, the server had a redirect in place for a version of the url containing a percent-encoded character, but not for the otherwise identical url without the percent-encoding. The character wasn't one that you'd usually encode. This unusual example shows that, as a matter of principle, a crawler ought to store the url exactly as found on the page and use it exactly as found when making the http request. 'Unencoding' should only be done for display purposes.
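Python's `urllib.parse` shows how an 'unencode, then re-encode' flow can change a url. In this sketch (the function names are hypothetical), the page contains `%7E`, an encoded tilde; `unquote` turns it into `~`, and `quote` leaves `~` alone because it's an unreserved character, so the request goes to a different url from the one on the page. It assumes Python 3.7 or later, where `quote` treats `~` as safe.

```python
from urllib.parse import quote, unquote, urlsplit, urlunsplit


def old_request_url(found_url: str) -> str:
    """Old flow (simplified): unencode the path for storage, re-encode it for the request."""
    parts = urlsplit(found_url)
    stored_path = unquote(parts.path)   # '%7E' becomes '~'
    request_path = quote(stored_path)   # '~' is unreserved, so it stays '~'
    return urlunsplit(parts._replace(path=request_path))


def new_request_url(found_url: str) -> str:
    """New flow: request the url exactly as it was found on the page."""
    return found_url


def display_url(found_url: str) -> str:
    """Unencoding happens only when showing the url to the user."""
    return unquote(found_url)


found = "https://example.com/docs/%7Euser/page.html"
```

If the server only handles the `%7E` form, the old flow requests `/docs/~user/page.html` and reports a bad link that is actually fine, while the new flow requests exactly what's on the page.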

When you read that, it'll seem obvious that a crawler should work that way. But it's the kind of decision you make early on in an app's development, and tend to work with as time goes on rather than spending a lot of time making fundamental changes to your app and risking breaking other things that work perfectly well.

Anyhow, that work is done. It'll affect very few people, but for those people it'll reduce false positives (or 'false negatives', depending on which way you look at a link that is reported bad but is actually fine).

[update 23 May 2019] I take it back: possibly the hardest thing to do was adding the sftp option (v9 will allow ftp, ftp with TLS (aka ftps) and sftp) for automatically ftp'ing the sitemap or checking for orphaned pages.
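Conceptually, an orphaned-pages check can be thought of as a set difference: files that exist on the server but were never reached by the crawl. A minimal Python sketch, assuming the server listing has already been fetched (for ftps that might come from `ftplib.FTP_TLS.nlst()`; Python's standard library has no SFTP client, so sftp would need a third-party library). The function name and the `index.html` rule are assumptions for illustration.

```python
from urllib.parse import urlsplit


def find_orphans(server_paths: list[str], crawled_urls: list[str]) -> list[str]:
    """Paths that exist on the server but were never reached by the crawl."""
    linked = {urlsplit(url).path for url in crawled_urls}
    # Assumption: a directory url like '/' is served by that directory's index.html.
    for path in list(linked):
        if path.endswith("/"):
            linked.add(path + "index.html")
    return sorted(path for path in server_paths if path not in linked)
```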

[update 10 Jun] A signed and notarized build of 9.0.3 is available. Please feel free to run it if you're prepared to contact us about any problems you notice. If you're a production user (ie if you already use Scrutiny and rely on it), please wait a while.

[update 19 Jun] v9 is now the main release, with 8.4.1 still available for download.