[Edit 18 Dec - beta made public, available from website]

[Edit 10 Mar 2019 - version 2 made public]

[Important note 17 Mar 2019: The app's name has had to change slightly, from Watchman to Website Watchman, because of a conflict with an open-source Linux title]

We kick off quite a few experimental projects. In most cases they never really live up to the original vision, or no one's interested.

This is different. It's already working so beautifully and proving so indispensable here that I'm convinced it will be even more important than Integrity and Scrutiny.

So what is it?

It monitors a whole website (or part of a website, or a single page) and reports changes.

You may want to monitor a page of your own, or a competitor's or a supplier's, and be alerted to changes. You may want to simply use it as a 'time machine' for your own website and have a record of all changes. There are probably use-cases that we haven't thought of.

You can easily schedule an hourly, daily, weekly or monthly scan so that you don't have to remember to do it. The app doesn't even need to be running; it'll start up at the scheduled time.

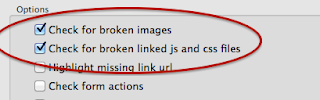

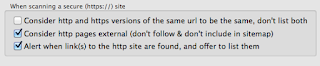

Other services like this exist, but this is a desktop app that you own and are in control of. It goes very deep. It can scan your entire site, with loads of scanning options just like Integrity and Scrutiny, plus blacklisting and whitelisting of partial URLs. It doesn't just take a screenshot; it keeps its own record of every change to every resource used by every page. It can display a page at any point in time: not just a screenshot but a 'living' version of the historic page, using the JavaScript and CSS as they were at the time.

It allows you to switch between different versions of a page and spot changes. It'll run a comparison and highlight the changes in the code, the visible text or the resources.

It stores the website in a web archive. You can export any version of any page at any point in time, either as a screenshot image or as a collection of all of the files (HTML, JS, CSS, images etc) involved in that version of that page.

The plan was to release this in beta in the New Year. But it's already at the stage where all of the fundamental functionality is in place and we're using it for real.

If you're at all interested in trying an early version and reporting back to us, you can now download the beta from the website. [Edit: version 1 is now the stable release and is free; version 2 is also free and is in beta]

The working title has been Watchtower, but it won't be possible to use that name because of a clash with the 'Watchtower library' and related apps. It'll likely be some variation on that name.