On today's computers I'm surprised that this isn't dealt with at a high or low level. An attempt to divide by zero can crash an app and it's surprisingly easy to do. Should it be necessary for a developer to have their wits about them every time they perform a division using a divisor that is a variable? And have to write an extra line to check that that variable is not set to zero?

It would perhaps be more elegant if the processor returned the largest possible number (INT_MAX or FLT_MAX etc depending on the type of the divisor). Yes, that's the wrong answer, but we're working with approximations all the time - for example,

float f = 1.0;

if (f == 1){

// unlikely to be executed because f as a float isn't exactly 1 - it has a 'precision',

// in terms of its bits it'll probably be 0.999999999999 or something

}

There are some very interesting philosophical points in Dave Williamson's answer. Such as the fact that infinity isn't a number. Zero is more generally considered a number, but that is questionable.

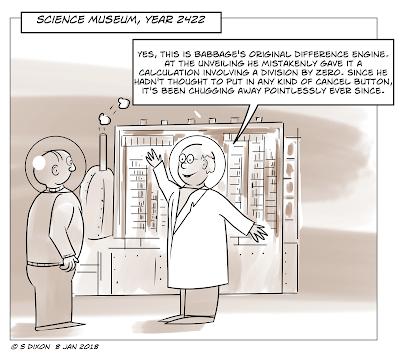

All of this inspired me to draw this:

No comments:

Post a Comment